Unveiling Google Search Console’s Crawl Stats: A Hidden Gem for SEO Professionals

In SEO, the tools professionals use can significantly affect how effectively a website is indexed and ranked. One tool that often flies under the radar is Google Search Console’s “Crawl Stats” report. For those managing large-scale websites, it offers critical insight into Googlebot’s crawling behavior, something standard SEO practice often overlooks.

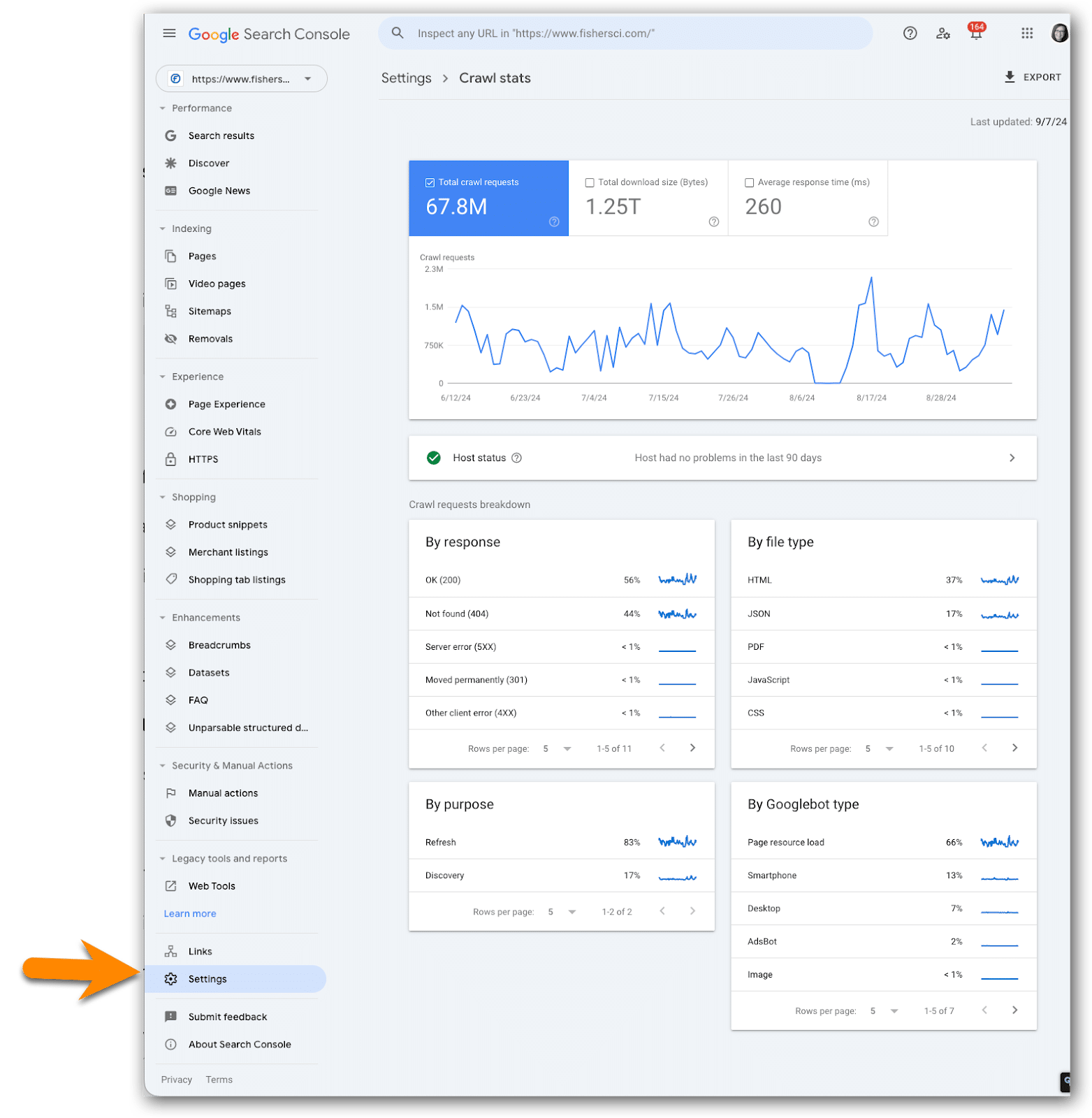

Accessing Crawl Stats

To reach the report, open the Settings section in Google Search Console and select Crawl Stats. The report gives a high-level overview of Googlebot’s activity on a site, along with more detailed breakdowns (for example by response code, file type, crawl purpose, and Googlebot type) that advanced users will find valuable once they are comfortable with the data.

The Value of Crawl Stats for Enterprises

For enterprise-level SEO professionals who lack access to dedicated crawling and monitoring solutions, this report is more than a nice-to-have: it can be a lifeline. Small websites have a less pressing need to monitor crawler activity, since their crawl budget is usually adequate, but keeping an eye on these metrics can still provide a competitive advantage in the long run.

Key Metrics Worth Monitoring

Focus on the metrics that fluctuate: anomalies in HTML requests, changes in download size, and shifts in response time. Noticeable irregularities in these numbers often yield the insights that drive strategic decisions. Consistent monitoring, ideally monthly and again after significant website updates, gives a clearer picture of how Googlebot reacts to site changes and allows for proactive optimization.
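One way to spot irregularities is to compare the latest reading of each metric against its own recent average. The sketch below is only an illustration: the metric names, the sample numbers, and the 30% threshold are assumptions chosen for the example and do not come from Google Search Console, so adjust them to whatever you record from the report.

```python
from statistics import mean


def flag_anomalies(history, latest, threshold=0.3):
    """Return metrics whose latest value deviates from the historical
    mean by more than `threshold` (relative change, e.g. 0.3 = 30%)."""
    flagged = {}
    for metric, value in latest.items():
        past = history.get(metric, [])
        if not past:
            continue  # no baseline recorded yet for this metric
        baseline = mean(past)
        if baseline == 0:
            continue  # relative change is undefined for a zero baseline
        change = (value - baseline) / baseline
        if abs(change) > threshold:
            flagged[metric] = round(change, 2)
    return flagged


# Hypothetical monthly readings copied by hand from the report.
history = {
    "html_requests": [1200, 1150, 1250],
    "avg_response_ms": [300, 320, 310],
}
latest = {"html_requests": 1180, "avg_response_ms": 620}

print(flag_anomalies(history, latest))  # {'avg_response_ms': 1.0}
```

Here the average response time has doubled against its baseline, so it is flagged, while HTML requests stay within the normal range. A flagged metric is a prompt to investigate, not a diagnosis.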

Logging and Tracking for Long-term Success

Because the Crawl Stats report covers only a limited window (the last 90 days at the time of writing), logging its data in a spreadsheet is highly recommended. A log lets professionals track crucial metrics over time, such as total crawl requests and average response time, and supports informed discussions with developers when issues arise. That historical context can then guide strategic SEO initiatives.
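For those who prefer a script to a hand-maintained spreadsheet, the log can be kept as a CSV file that any spreadsheet tool opens. This is a minimal sketch under stated assumptions: the column names and the helper functions `append_snapshot` and `read_log` are hypothetical, and the values are expected to be typed in from the report rather than fetched automatically.

```python
import csv
from datetime import date
from pathlib import Path

# Hypothetical column layout; add more columns as needed.
FIELDS = ["date", "total_crawl_requests", "avg_response_ms"]


def append_snapshot(log_path, total_crawl_requests, avg_response_ms, day=None):
    """Append one reading to the CSV log, writing the header row first
    if the file does not exist yet or is empty."""
    path = Path(log_path)
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "date": (day or date.today()).isoformat(),
            "total_crawl_requests": total_crawl_requests,
            "avg_response_ms": avg_response_ms,
        })


def read_log(log_path):
    """Return every logged reading as a list of dicts (values are strings)."""
    with Path(log_path).open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

Calling `append_snapshot("crawl_stats_log.csv", 15000, 310)` once a month builds up a history that outlives the report's own window, and `read_log` returns it for charting or for comparison against a baseline.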

Integrating Crawl Stats with URL Management Strategies

When analyzing crawl data, it is also worth considering how it intersects with URL shorteners and link management. Short links make link performance easier to track, but each one adds a redirect that a crawler has to follow, so they are worth reviewing alongside crawl behavior. By logging these insights next to crawl data, professionals can gain a broader understanding of how URL structures affect SEO outcomes. Tools like #LinksGPT and #UrlExpander can aid in this process, optimizing link effectiveness and traffic flow.

In conclusion, SEO professionals and digital marketers are encouraged to develop a systematic approach to monitoring Crawl Stats. Follow-up articles may uncover further insights; in the meantime, this often-overlooked feature could yield significant ROI for SEO strategies.

#SEO #GoogleSearchConsole #CrawlStats #BitIgniter #LinksGPT #UrlExpander #UrlShortener
